Hi, I'm James C. Fey

I am a postdoc at Malmö University, where I research academic writing conventions within scientific communities. I am also currently researching ghost hunting and exploring how larps can support community building and social engagement as ways to rethink futures.

I did my Ph.D. research in the Social Emotional Technology Lab in the Computational Media Department at UCSC. There I explored applications of social wearable technology, maker kits, and DIY learning to support social interactions through educational live-action roleplay (edu-larp). I've been a visiting researcher at the Interaction Lab at the University of Saskatchewan. I've designed a mobile VR financial literacy app for Allstate. I've also mentored high school students as part of the Science Internship Program at UCSC.

Projects

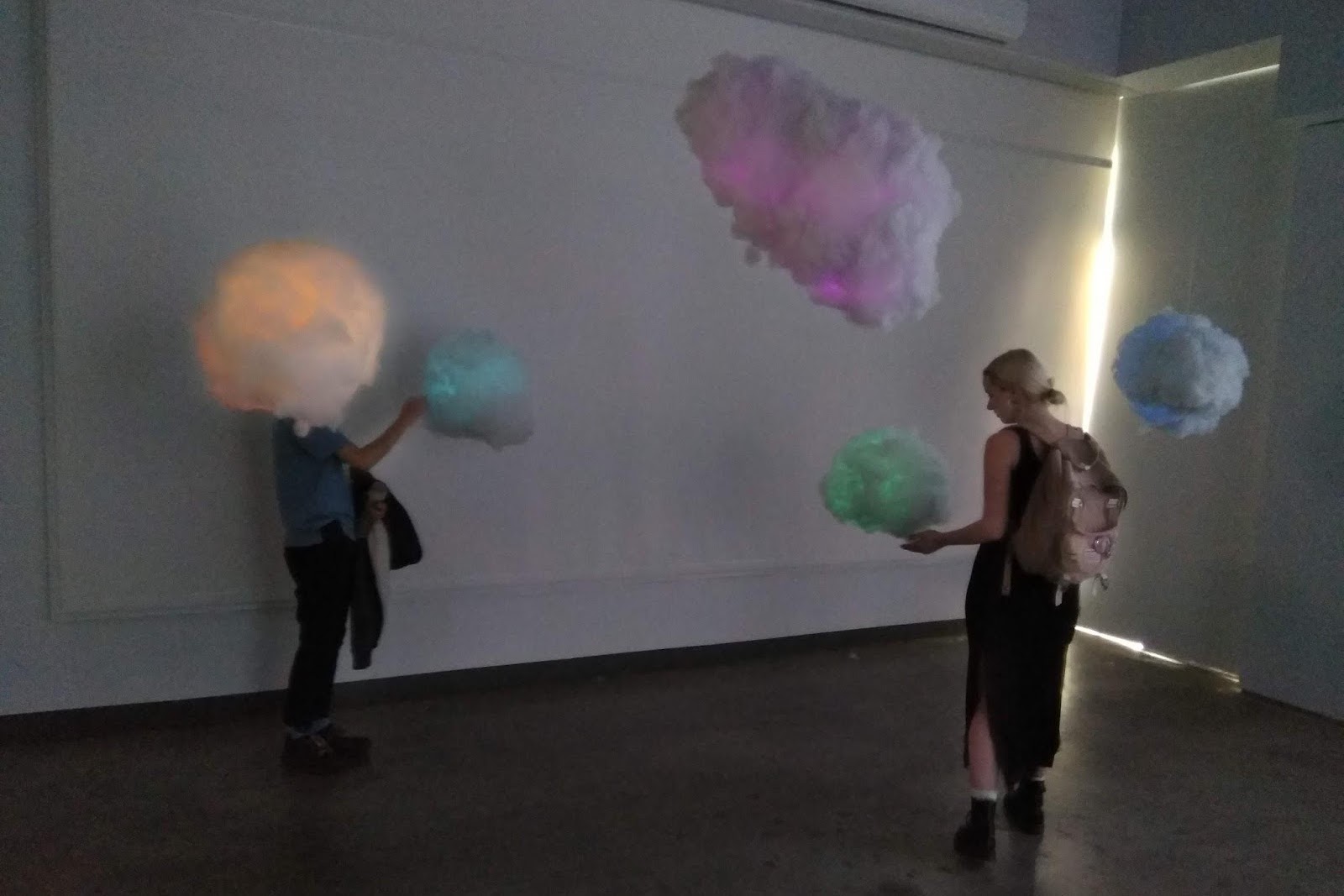

NIMBUS

Nimbus is a set of five reactive LED clouds installed at the Fall 2018 UCSC DANM open studios. The installation reacted to the spectators around it, and we sought to invite curiosity: the soft texture, placement at eye level, and subtle swaying served as an invitation to come closer. During the open studios, attendees could walk among the clouds and explore the interaction with limited guidance. As people clustered around the pod of clouds and talked amongst themselves, they noticed that the brightness of the clouds seemed to flutter in time with their words. This was the attendees' first invitation to interact with the installation. They would begin talking toward the clouds, clapping, whistling, and even touching them. If they moved the LEDs with significant force, lightning would spring across the surface of the clouds in response. As attendees cycled through the space, they saw others interacting and took that as their own invitation to experiment.

Cloud construction by Evie Chang and Evelyn Barcenes

Electronics fabrication and programming by James Fey

SW4LARP

As part of the social wearables project in Katherine Isbister’s SET Lab, I designed hardware to manage in-game stat tracking and communicate these stats to other players during Live Action Role Playing (LARP). We worked with LARP designers to develop wearable technology with the goal of augmenting the existing play experience. The devices needed to keep players focused on in-game actions and interpersonal interactions; a main design goal was to avoid replacing that social action with the isolating act of managing a personal electronic device. To that end, we examined how the placement and mapping of the device and its interface could support clear communication and navigation without detracting from existing player actions.

SceneSampler

SceneSampler invites people to move through a festival in ways they otherwise wouldn't, capturing traces of the social scene occurring there in the form of pictures and sounds. The goal of SceneSampler is to give players and spectators a feel for the festival experience as it changes over time. It is a collaborative game for two players in which each player gets a tech-enabled prop to help them complete game objectives. One wears a 'camera' with an embedded tablet to document scenes, and the other carries a sound 'sampler' built using Adafruit components to identify specific types of social sounds. Players receive collection challenges (e.g., find a silent crowd or a loud person) that require them to fill the sampler with the ambient noise at the scene and then take a snapshot of the scene itself. They then return to home base to share what they found with a human game master. Successful samples are marked on a map of the overall space with a sticker for that particular challenge, so over time the map builds up a layer of stickers that mark popular festival locations. Captured images are also uploaded to social media to compete for the most likes overall. Players can take on as many challenges as they like and, when done, can pose for a group picture with a placard showing their accomplishments. SceneSampler gives players a fun reason to move together through a social space and captures interesting traces of what's happening there.

- Katherine Isbister, Producer and Research Director
- Elena Márquez Segura, Design and Research Lead
- James Fey, Game Technologist, Design, Staging and Playtesting
- Jared Pettitt, Lead Game Technologist, Design, Staging and Playtesting, Video Production
- Edward Melcer, Research, Game Technologist, Design, Staging and Playtesting
- Samvid Niravbhai Jhaveri, Game Technologist, Staging and Playtesting

Immaterial

For my undergraduate capstone project in the Computer Game Design program at UCSC, I worked with a team on a room-scale VR puzzle experience called Immaterial. The design responded to many of the VR design tropes that had emerged with the trend toward immersive VR. Most puzzle games give players a set of mechanics for solving puzzles, but in Immaterial the player exploits virtual reality itself to do the impossible. Many of the puzzles revolved around the relationship between the physical space the player occupies and the digital space that VR lets them interface with. We used the existing hardware platform to encourage players to collaborate with others or manipulate their play space to solve puzzles that would normally be impossible for a single person to complete. For example, a player can leave a controller on the ground or hand it to someone else to be in two places at once. Immaterial also explores the disconnect between virtual and physical space. Players are so immersed in their virtual surroundings that they treat virtual boundaries as if they were physical ones. In virtual reality, a locked door will not stop a player, because they can simply walk through it. Virtual reality games have struggled to stop players from using these exploits to cheat, but Immaterial embraces them.

See the project site for a list of the full team.

Project site: https://immaterialgame.com/

Download: https://immaterial.itch.io/immaterial

Immaterial VR trailer: https://www.youtube.com/watch?v=6uEISex-F4Y

SCARF

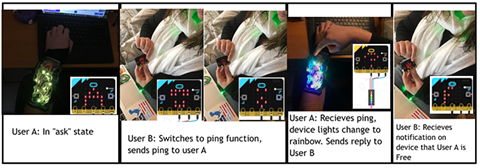

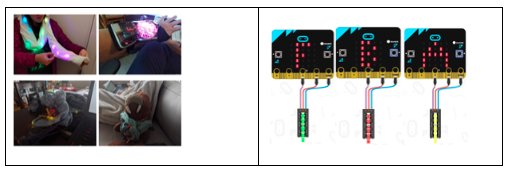

During the COVID-19 pandemic, many of us were able to sequester ourselves in our homes and did our best to carry on our work with some degree of normalcy, but the circumstances of that work had changed. Our workspaces were no longer offices or cubicles but corners of kitchen tables or converted garages: any space we could hide away in to conduct our video meetings, where we would apologize when our cats, which we swore were happily tucked away in a closed bedroom, suddenly jumped into the frame. Our coworkers were now our housemates and families, who were also trying to remain productive in this new normal. Working from home blurs social boundaries, alters temporal logics, and forces a review of social interactions. The global pandemic has necessitated working from home for the foreseeable future, which presents various challenges of peacefully coexisting in the same space with others in the household. It is difficult to keep a private workspace free from unwanted interruptions and to maintain spatial and social boundaries with others in the household, and the breakdown of these boundaries might negatively affect relationships. Using technology to aid communication might help keep these breakdowns from occurring, or offer an opportunity to add nuanced social cues to interactions among friends, family, or others who cohabitate.

We created a device we call SCARF (Social Communication: Affords Real Fashion): a social wearable string of LEDs attached to a Micro:Bit that lights up in a specific color to indicate the user’s current state. We wanted our device not only to support communication but also to take into account nuanced interactions that might occur between people in the home, and how these might affect the dynamics of their relationships. We used the concept of “supple” user interfaces to guide our work, which has three key factors: subtle social signals, emergent dynamics, and moment-to-moment experiences.

The SCARF system involves two users and a pair of custom devices that can be worn or placed on or near the users’ workspaces throughout the day (see Figure 1 Left). Each user can toggle between three pre-specified LED colors to indicate different activity ‘states’, and can use the buttons on their own device to ping the other person’s device and learn its current state (see Figure 1 Right). The FREE state indicates that the user does not mind being interrupted and pulled into conversation. The BUSY state indicates that the person is focused on a task and unavailable for conversation. In these two states, a pinged device automatically replies to the pinging device with a FREE or BUSY status, which appears as scrolling text across the Micro:Bit’s display (see Figure 2). The ASK state is used when a person’s availability is likely to fluctuate: a ping prompts the user to press one of two buttons to respond FREE or BUSY.
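The ping/reply behavior described above amounts to a small protocol between the two devices. The sketch below models only that logic in plain TypeScript, assuming the radio link, LEDs, and buttons can be abstracted away; it is not the original MakeCode program, and every name in it (`ScarfDevice`, `receivePing`, `answer`, `ping`, `answerPing`, `shown`) is hypothetical.

```typescript
// Hypothetical model of the SCARF ping/reply logic described in the text.
// Radio transport, LEDs, and buttons are abstracted away; none of these
// names come from the original device code.

type ScarfState = "FREE" | "BUSY" | "ASK";
type Reply = "FREE" | "BUSY";

class ScarfDevice {
  name: string;
  state: ScarfState = "FREE";
  // Set when an ASK-mode device has been pinged and is waiting for its wearer.
  awaitingAnswer = false;
  // Replies this device has displayed as scrolling text, oldest first.
  shown: Reply[] = [];

  constructor(name: string) {
    this.name = name;
  }

  // The wearer toggles among the three pre-specified states (LED colors).
  setState(next: ScarfState): void {
    this.state = next;
  }

  // Handle an incoming ping. FREE and BUSY reply automatically;
  // ASK returns null and waits for the wearer to press a button.
  receivePing(): Reply | null {
    const current = this.state;
    if (current === "ASK") {
      this.awaitingAnswer = true;
      return null;
    }
    return current;
  }

  // The wearer answers a pending ASK ping with one of two buttons.
  answer(reply: Reply): Reply {
    if (!this.awaitingAnswer) {
      throw new Error(`${this.name} has no pending ping`);
    }
    this.awaitingAnswer = false;
    return reply;
  }

  // Stand-in for scrolling the reply across the display.
  show(reply: Reply): void {
    this.shown.push(reply);
  }
}

// The pinger asks its partner for a status; automatic replies are shown at once.
function ping(pinger: ScarfDevice, pinged: ScarfDevice): Reply | null {
  const reply = pinged.receivePing();
  if (reply !== null) {
    pinger.show(reply);
  }
  return reply;
}

// In ASK mode, the pinged wearer answers manually and the pinger shows it.
function answerPing(pinger: ScarfDevice, pinged: ScarfDevice, reply: Reply): void {
  pinger.show(pinged.answer(reply));
}
```

For example, if device B is set to BUSY, `ping(a, b)` immediately shows BUSY on device A; if B is set to ASK, `ping(a, b)` returns `null` until B's wearer responds with `answerPing(a, b, "FREE")`. Keeping the ASK branch as a pending flag rather than a blocking wait mirrors the fact that the wearer may answer at any later moment.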

The device was constructed using a BBC Micro:Bit, a microcontroller board that can be programmed with the MakeCode platform, and NeoPixel Dots, chosen for their robustness and physical flexibility. The intended form factor was a scarf, and 3 out of 4 participants wore it that way, but the device could also be worn in any other way the user found comfortable; one participant placed the electronics in a runner’s armband for comfort. Beyond the device’s defined functions, the system also included the socially agreed-upon meanings of those states, which developed over the course of the deployment.

Figure 1: (Left) Social wearable ‘SCARF’ device and different placements on and off body. (Right) Display of FREE, BUSY, and ASK states.

Publications

Towards Better Understanding Maker Ecosystems

James Fey and Katherine Isbister. 2021. Towards Better Understanding Maker Ecosystems. 6 pages.

Social Wearables for Edu-larp

James Fey and Katherine Isbister. 2020.

Designing Future Social Wearables with Live Action Role Play (Larp) Designers

Elena Márquez Segura, James Fey, Ella Dagan, Samvid Niravbhai Jhaveri, Jared Pettitt, Miguel Flores, and Katherine Isbister. 2018. Designing Future Social Wearables with Live Action Role Play (Larp) Designers. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). Association for Computing Machinery, New York, NY, USA, Paper 462, 1–14.

Designing and Evaluating 'In the Same Boat', A Game of Embodied Synchronization for Enhancing Social Play

Raquel Breejon Robinson, Elizabeth Reid, James Collin Fey, Ansgar E. Depping, Katherine Isbister, and Regan L. Mandryk. 2020. Designing and Evaluating 'In the Same Boat', A Game of Embodied Synchronization for Enhancing Social Play. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI '20). Association for Computing Machinery, New York, NY, USA, 1–14.

Flippo the Robo-Shoe-Fly: A Foot Dwelling Social Wearable Companion

Ella Dagan, James Fey, Sanoja Kikkeri, Charlene Hoang, Rachel Hsiao, and Katherine Isbister. 2020. Flippo the Robo-Shoe-Fly: A Foot Dwelling Social Wearable Companion. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA '20). Association for Computing Machinery, New York, NY, USA, 1–10.

'In the Same Boat', A Game of Mirroring Emotions for Enhancing Social Play

Raquel Breejon Robinson, Elizabeth Reid, Ansgar E. Depping, Regan Mandryk, James Collin Fey, and Katherine Isbister. 2019. 'In the Same Boat', A Game of Mirroring Emotions for Enhancing Social Play. In Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (CHI EA '19). Association for Computing Machinery, New York, NY, USA, Paper INT011, 1–4.

MOODsic: A Computational-Musical Augmentation to Support Mindfulness

Ferran Altarriba Bertran, James Fey, and Leya Breanna Baltaxe-Admony. 2019. MOODsic: A Computational-Musical Augmentation to Support Mindfulness.

The source code for this website is on GitHub.